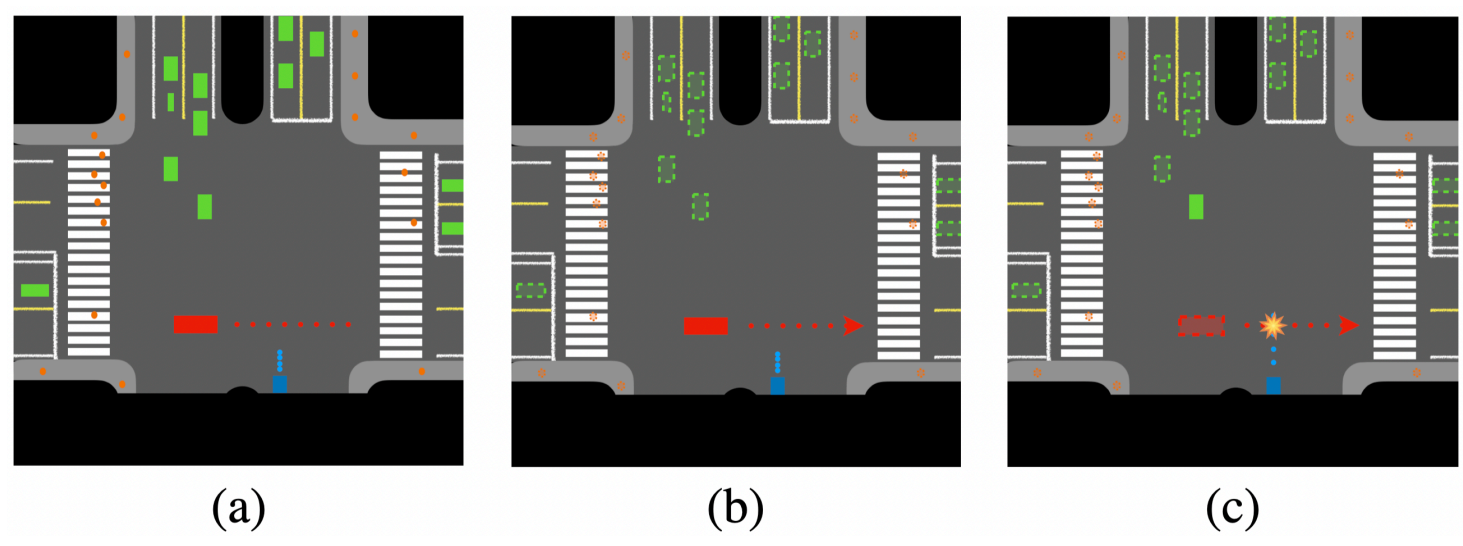

Interaction Types

We define four interaction types to cover the different definitions of risk discussed in the community: Interactive (yielding to a dynamic risk), Collision (a crashing scenario), Obstacle (interacting with static elements), and Non-interactive (normal driving).

Interactive

Collision

Obstacle

Non-interactive

Data Collection

We develop a scenario taxonomy and a data augmentation pipeline to collect diverse scenarios procedurally. The taxonomy includes attributes such as road topology, scenario type, ego vehicle behavior, and the behavior of other traffic participants. Once a scenario script is specified from this taxonomy, two human subjects act it out accordingly. To form the final scenario dataset, we augment each collected scenario by changing attributes, including time of day, weather conditions, and traffic density.
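The augmentation step can be pictured as expanding one collected scenario into every combination of environment attributes. The sketch below is a minimal illustration, not the actual pipeline: the attribute values (`"noon"`, `"rain"`, `"high"`, and so on), the dictionary keys, and the `augment` helper are all hypothetical, and it assumes the recorded behavior of the two human subjects is reused unchanged while only environment attributes vary.

```python
from itertools import product

# Hypothetical attribute values; the real pipeline's options are not listed here.
TIME_OF_DAY = ["noon", "sunset", "night"]
WEATHER = ["clear", "rain", "fog"]
TRAFFIC_DENSITY = ["low", "medium", "high"]


def augment(base_scenario: dict) -> list[dict]:
    """Return one variant of `base_scenario` per (time, weather, density) combination.

    Assumption: the collected scenario (e.g., the human subjects' recorded
    behavior) is kept as-is; only environment attributes are overwritten.
    """
    variants = []
    for time_of_day, weather, density in product(TIME_OF_DAY, WEATHER, TRAFFIC_DENSITY):
        variant = dict(base_scenario)  # shallow copy, so the base scenario is untouched
        variant.update(time_of_day=time_of_day, weather=weather, traffic_density=density)
        variants.append(variant)
    return variants


base = {"map": "Town03", "road_topology": "left turn", "interaction_type": "collision"}
augmented = augment(base)
print(len(augmented))  # 27 = 3 times of day x 3 weather conditions x 3 densities
```

A full Cartesian product is the simplest choice; a real pipeline might instead sample a subset of combinations to control dataset size.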

Experiment Setups

Risk Identification Baselines

The baselines take a sequence of historical data as input and output a risk score for each road user (e.g., vehicle or pedestrian) or unexpected event (e.g., collision or construction zone). We consider a road user or an unexpected event risky if its score exceeds a predefined threshold. We implement 10 risk identification algorithms and categorize them into the following four types.
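The thresholding rule above can be sketched in a few lines. This is an illustration only: the `RiskCandidate` type, the `identify_risks` helper, the candidate IDs, and the default threshold of 0.5 are hypothetical, and the scores stand in for whatever a risk identification baseline outputs.

```python
from dataclasses import dataclass


@dataclass
class RiskCandidate:
    """A road user (e.g., vehicle or pedestrian) or an unexpected event (e.g., collision)."""
    track_id: str
    score: float  # risk score produced by a risk identification baseline


def identify_risks(candidates: list[RiskCandidate], threshold: float = 0.5) -> list[str]:
    """Return the IDs of candidates whose score strictly exceeds the (hypothetical) threshold."""
    return [c.track_id for c in candidates if c.score > threshold]


frame = [
    RiskCandidate("vehicle_12", 0.81),
    RiskCandidate("pedestrian_3", 0.47),
    RiskCandidate("construction_zone_1", 0.66),
]
print(identify_risks(frame))  # ['vehicle_12', 'construction_zone_1']
```

Because road users and unexpected events share one scoring interface, the same rule applies to both kinds of candidate.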

For training details, please refer to our GitHub repository.

Evaluation Metrics

We devise three metrics that evaluate the ability of a risk identification algorithm to (1) identify locations of risks, (2) anticipate risks, and (3) facilitate decision-making.

Localization and Anticipation Demo

Qualitative Results For Risk Identification

Fine-grained Scenario-based Analysis

Planning-aware Demo

Temporal Consistency

| Method | 1s | 2s | 3s |
|---|---|---|---|
| QCNet [4] | 50.2% | 26.9% | 18.5% |
| DSA [5] | 14.0% | 4.6% | 3.8% |
| RRL [6] | 19.0% | 8.5% | 4.7% |
| BP [7] | 4.2% | 2.4% | 1.9% |
| BCP [8] | 6.9% | 3.9% | 3.4% |

Dataset Details

Sensor Suite

We collect camera, depth, and instance segmentation images from all front-view sensors. Our perspective-view camera has a 120-degree field of view.

In addition, we collect object bounding boxes and precise lane markings, which can be used to render BEV images (maximum resolution: 10 pixels per meter).
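To rasterize bounding boxes and lane markings into a BEV image, each point in meters must be mapped to a pixel. The sketch below uses the 10 pixels-per-meter resolution stated above; the image size, the ego-centered layout, the axis convention (+x forward, +y right), and the `ego_to_bev_pixel` helper are assumptions for illustration.

```python
import math

PIXELS_PER_METER = 10  # maximum BEV resolution stated above


def ego_to_bev_pixel(x: float, y: float, image_size: int = 400,
                     ppm: float = PIXELS_PER_METER) -> tuple[int, int]:
    """Map a point in the ego frame (meters) to (row, col) in a square BEV image.

    Assumptions: the ego vehicle sits at the image center, +x points forward
    (up in the image), and +y points to the right.
    """
    center = image_size / 2
    row = math.floor(center - x * ppm)  # forward is toward row 0
    col = math.floor(center + y * ppm)  # right is toward larger columns
    return row, col


print(ego_to_bev_pixel(5.0, -2.0))  # (150, 180): 5 m ahead, 2 m to the left
```

With a 400-pixel image at 10 pixels per meter, the rendered area covers 40 m x 40 m around the ego vehicle; lowering `ppm` widens the area at the cost of detail.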

Alongside the camera data, we also collect LiDAR, GNSS, and IMU data. Our data is captured at a frame rate of 20 Hz.
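At 20 Hz, consecutive frames are 0.05 s apart, so time horizons convert directly into frame counts. The helpers below are a hypothetical sketch of that arithmetic and assume frame 0 is captured at t = 0.

```python
FRAME_RATE_HZ = 20  # capture rate stated above


def seconds_to_frames(seconds: float, rate_hz: int = FRAME_RATE_HZ) -> int:
    """Number of frames spanning `seconds` at the given capture rate."""
    return round(seconds * rate_hz)


def frame_timestamp(frame_index: int, rate_hz: int = FRAME_RATE_HZ) -> float:
    """Timestamp in seconds of a frame, assuming frame 0 is captured at t = 0."""
    return frame_index / rate_hz


print([seconds_to_frames(t) for t in (1, 2, 3)])  # [20, 40, 60]
print(frame_timestamp(30))  # 1.5
```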

Scenario Attributes

The following table summarizes all possible values of each taxonomy attribute. The proposed taxonomy can describe at most 547 basic scenarios; this count does not consider the Map and Area ID attributes.

| Attribute | Value |
|---|---|
| Map | Town01, Town02, Town03, Town05, Town06, Town07, Town10HD, A0, A1, A6, B3, B7, B8 |
| Road topology | forward, left turn, right turn, u-turn, lane change left, right lane change |
| Interaction type | interactive, collision, obstacle, non-interactive |
| Interacting agent type | car, truck, bicyclist, motorcyclist, pedestrian |
| Interacting agent's behavior | forward, left turn, right turn, u-turn, lane change left, right lane change, crosswalking, jaywalking, go into roundabout, go around roundabout, exit roundabout |
| Obstacle type | traffic cone, barrier, warning, illegal parking vehicle |
| Traffic violation | Running red light, ignoring stop sign, driving on sidewalk, jay-walker |
| Ego's reaction | right-deviation, left-deviation |
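A scenario script drawn from this taxonomy can be represented as a small record that is checked against the allowed values. The sketch below copies a subset of the values from the taxonomy table above; the `BasicScenario` class, its field names, and the rule that an obstacle type is set exactly for obstacle scenarios are hypothetical, since the actual constraints behind the count of 547 basic scenarios are not given here.

```python
from dataclasses import dataclass
from typing import Optional

# A subset of the attribute values from the taxonomy table (Map and Area ID omitted).
ROAD_TOPOLOGY = {"forward", "left turn", "right turn", "u-turn",
                 "lane change left", "right lane change"}
INTERACTION_TYPE = {"interactive", "collision", "obstacle", "non-interactive"}
AGENT_TYPE = {"car", "truck", "bicyclist", "motorcyclist", "pedestrian"}
OBSTACLE_TYPE = {"traffic cone", "barrier", "warning", "illegal parking vehicle"}


@dataclass(frozen=True)
class BasicScenario:
    """Hypothetical record for one basic scenario; field names are illustrative."""
    road_topology: str
    interaction_type: str
    agent_type: Optional[str] = None     # None when no agent interacts with the ego
    obstacle_type: Optional[str] = None  # only used for obstacle scenarios

    def validate(self) -> None:
        """Raise ValueError if any field falls outside the taxonomy."""
        if self.road_topology not in ROAD_TOPOLOGY:
            raise ValueError(f"unknown road topology: {self.road_topology!r}")
        if self.interaction_type not in INTERACTION_TYPE:
            raise ValueError(f"unknown interaction type: {self.interaction_type!r}")
        if self.agent_type is not None and self.agent_type not in AGENT_TYPE:
            raise ValueError(f"unknown agent type: {self.agent_type!r}")
        if self.obstacle_type is not None and self.obstacle_type not in OBSTACLE_TYPE:
            raise ValueError(f"unknown obstacle type: {self.obstacle_type!r}")
        # Assumed consistency rule: an obstacle type is set exactly for obstacle scenarios.
        if (self.interaction_type == "obstacle") != (self.obstacle_type is not None):
            raise ValueError("obstacle_type must be set iff interaction_type is 'obstacle'")


BasicScenario("left turn", "collision", agent_type="car").validate()  # passes
```

Validating scripts up front catches typos in attribute values before any human subject drives the scenario.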

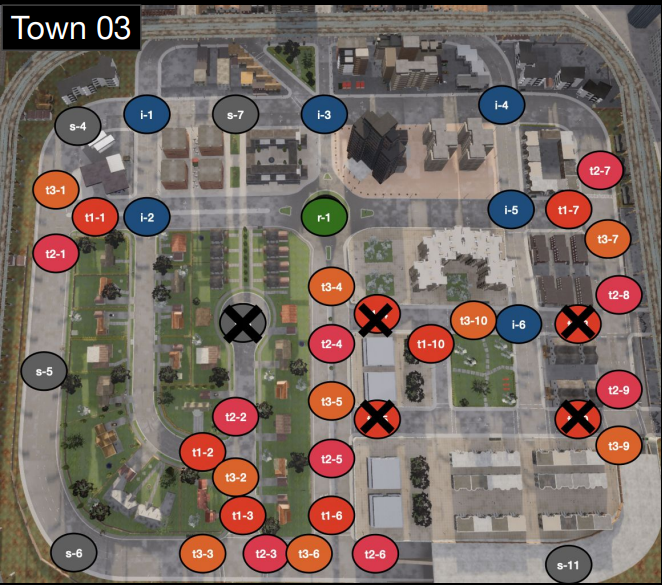

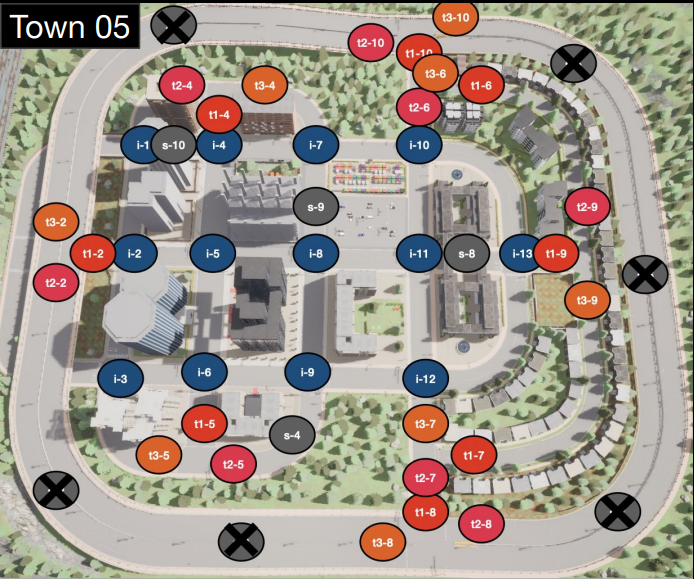

Labeled Area for Data Collection

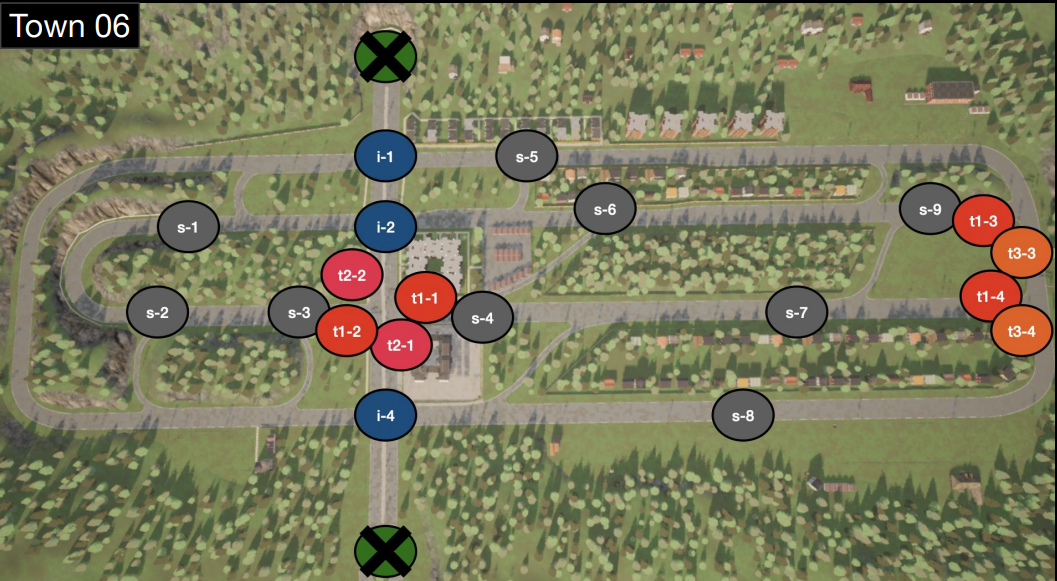

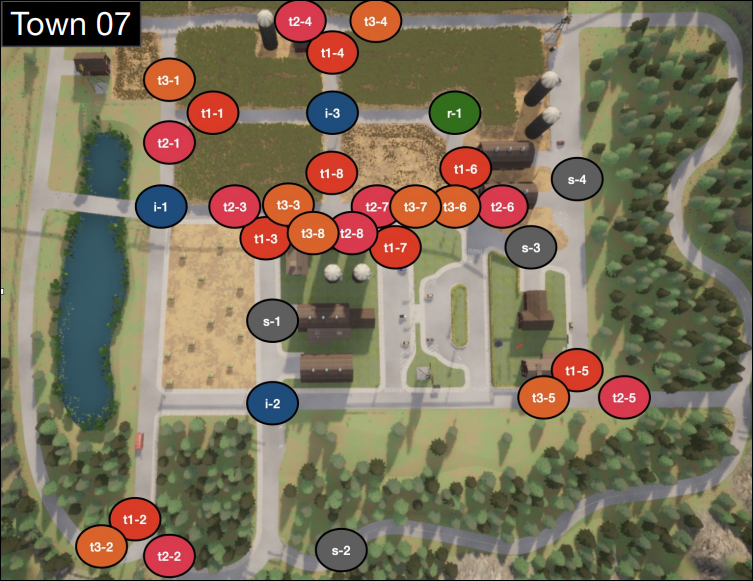

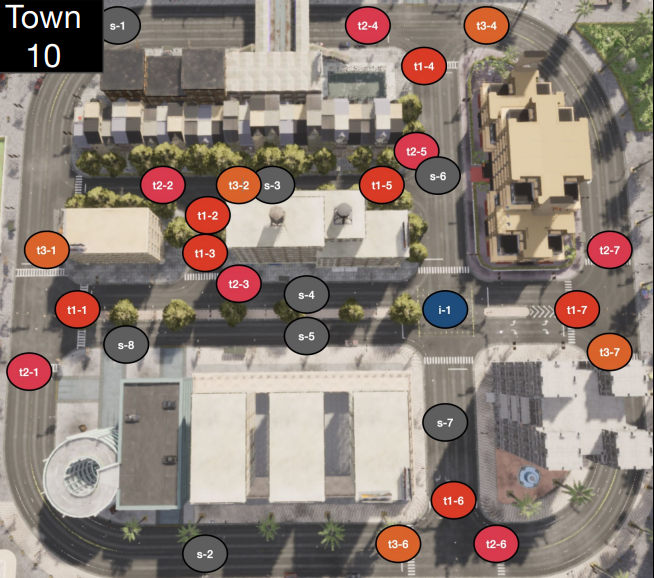

Maps from the CARLA simulator. The notations 'i', 't1', 't2', 't3', 's', and 'r' denote a 4-way intersection, T-intersection-A, T-intersection-B, T-intersection-C, a straight road, and a roundabout, respectively.

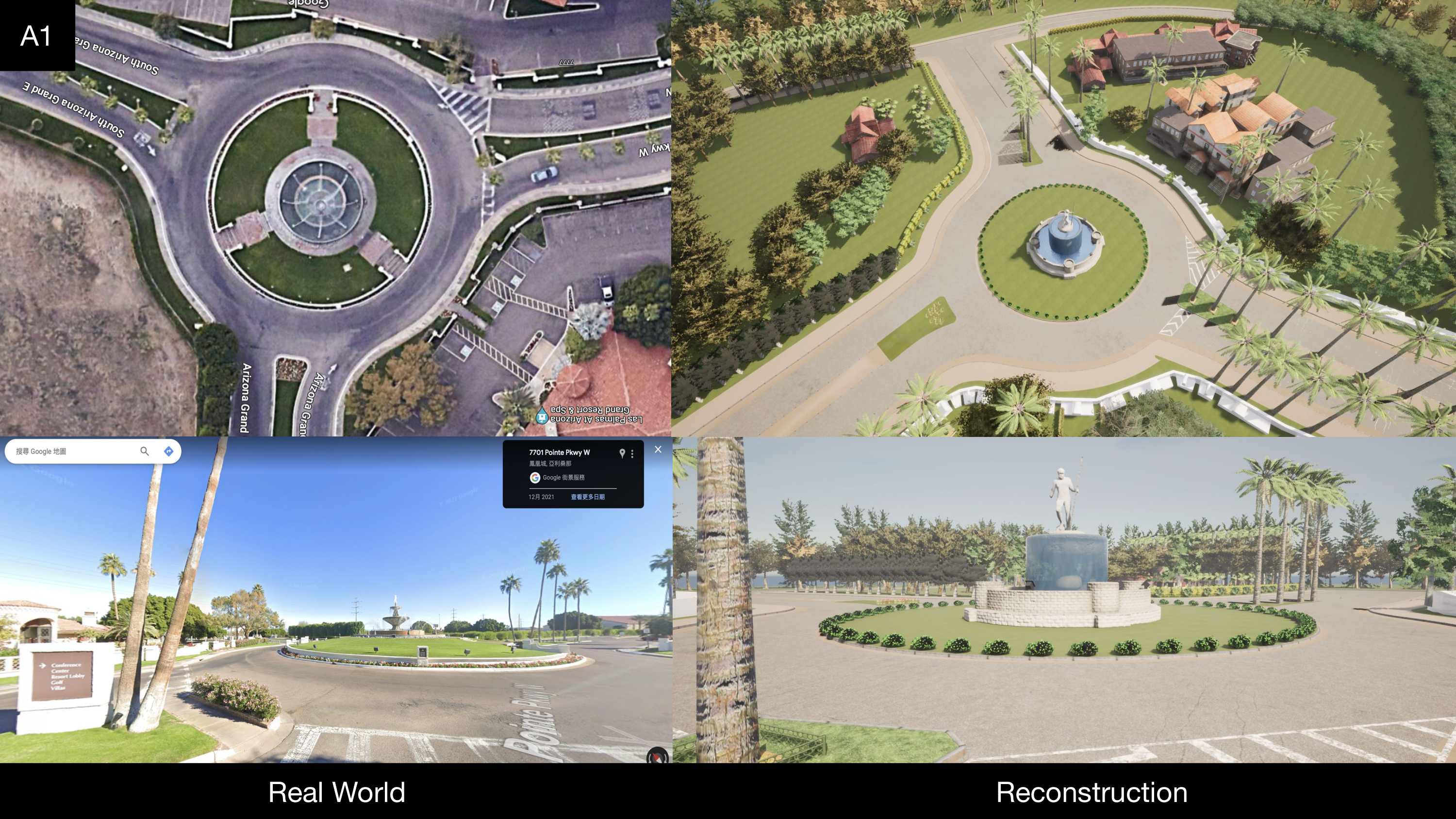

Additional Maps from the Real World. To overcome the limited number of roundabouts in CARLA, we incorporate real-world maps from CAROM-Air [9] and reconstruct them in the CARLA simulator. Specifically, we select A0, A1, A6, B3, B7, and B8 from CAROM-Air.

Dataset Splits

| Split | Interactive | Collision | Obstacle | Non-interactive |
|---|---|---|---|---|
| Training | 925 | 1044 | 850 | 1023 |
| Validation | 348 | 283 | 258 | 496 |
| Testing | 521 | 375 | 322 | 471 |
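The per-split totals and proportions follow from the split table by simple summation. The snippet below copies the counts from that table; the `SPLITS` dictionary and the printed format are illustrative choices.

```python
# Counts per split and interaction type, copied from the split table above.
SPLITS = {
    "Training":   {"Interactive": 925, "Collision": 1044, "Obstacle": 850, "Non-interactive": 1023},
    "Validation": {"Interactive": 348, "Collision": 283,  "Obstacle": 258, "Non-interactive": 496},
    "Testing":    {"Interactive": 521, "Collision": 375,  "Obstacle": 322, "Non-interactive": 471},
}

# Sum over interaction types within each split, then over splits.
split_totals = {name: sum(counts.values()) for name, counts in SPLITS.items()}
grand_total = sum(split_totals.values())

for name, total in split_totals.items():
    print(f"{name}: {total} ({total / grand_total:.1%} of all)")
print(f"Total: {grand_total}")  # 6916
```

This gives 3842 training, 1385 validation, and 1689 testing entries, or roughly a 56/20/24 split.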